Hackworth

- 0 Posts

- 20 Comments

12·9 days ago

Here’s the 60 Minutes piece and Anthropic’s June article about the one in their own office.

Claudius was cajoled via Slack messages into providing numerous discount codes and let many other people reduce their quoted prices ex post based on those discounts. It even gave away some items, ranging from a bag of chips to a tungsten cube, for free.

Their article on this trial has some more details too.

221·9 days ago

That was all part of the idea, though, because Anthropic had designed this test as a stress test to begin with. Previous runs in their own office had indicated similar concerns.

92·10 days ago

Here’s a metaphor/framework I’ve found useful but am trying to refine, so feedback welcome.

Visualize the deforming rubber sheet model commonly used to depict masses distorting spacetime. Your goal is to roll a ball onto the sheet from one side such that it rolls into a stable or slowly decaying orbit of a specific mass. You begin aiming for a mass on the outer perimeter of the sheet. But with each roll, you must aim for a mass further toward the center. The longer you roll, the more masses sit between you and your goal, to be rolled past or slingshotted around. As soon as you fail to hit a goal, you lose. But until then, you can continue to play indefinitely.

The model’s latent space is the sheet. The way the prompt is worded is your aiming/rolling of the ball. The response is the path the ball takes. And the good (useful, correct, original, whatever your goal was) response/inference is the path that becomes an orbit of the mass you’re aiming for. As the context window grows, the path becomes more constrained, and there are more pitfalls the model can fall into. Eventually you lose: there’s a phase transition, and the model starts going way off the rails. This phase transition was formalized mathematically in this paper from August.

The masses are attractors that have been studied at different levels of abstraction. And the metaphor/framework seems to work at different levels as well, as if the deformed rubber sheet is a fractal with self-similarity across scale.

One level up: the sheet becomes the trained alignment, the masses become potential roles the LLM can play, and the rolled ball is the RLHF or fine-tuning. So we see the same kind of phase transition in prompting (from useful to hallucinatory), in pre-training (poisoned training data), and in post-training (switching roles/alignments).

Two levels down: the sheet becomes the neuron architecture, the masses become potential next words, and the rolled ball is the transformer process.

In reality, the rubber sheet has like 40,000 dimensions, and I’m sure a ton is lost in the reduction.

191·12 days ago

There’s a lot of research around this. LLMs go through phase transitions when they reach the thresholds described in Multispin Physics of AI Tipping Points and Hallucinations. That paper is more about predicting the transition from helpful output to hallucination within regular prompting contexts. But we see similar phase transitions between roles and behaviors in the fine-tuning results presented in Weird Generalization and Inductive Backdoors: New Ways to Corrupt LLMs.

This may be related to attractor states that we’re starting to catalog in the LLM’s latent/semantic space. It seems like the underlying topology contains semi-stable “roles” (attractors) that the LLM generations fall into (or are pushed into in the case of the previous papers).

Unveiling Attractor Cycles in Large Language Models

Mapping Claude’s Spiritual Bliss Attractor

The math is all beyond me, but as I understand it, some of these attractors are stable across models and languages. We do, at least, know that there are some shared dynamics that arise from the nature of compressing and communicating information.

Emergence of Zipf’s law in the evolution of communication

But the specific topology of each model is likely some combination of the emergent properties of information/entropy laws, the transformer architecture itself, language similarities, and the similarities in training data sets.

121·13 days ago

Krita should letcha set the white point in the levels tool. But it won’t letcha pick white with the eyedropper, which is a notable omission.

434·13 days ago

Of the 2, I’ve come to prefer Krita. Acly replaces most of Photoshop’s generative tools cleanly and improves upon them with features like pose vectors and live mode.

10·13 days ago

Preach. My key bindings followed me from Avid to FCP to Premiere. Still hittin H for RaHzor.

12·14 days ago

Are they expecting AI to… make decisions regarding tenants? I wonder if they are aware of universal prompt injection.

3·14 days ago

Copilot is just an implementation of GPT. Claude’s the other main one, at least as far as performance goes.

12·21 days ago

Westworld

2·1 month ago

I guess those scientist guys all working on A.I. never gave cocaine and Monster Energy a try.

3·2 months ago

Empathize as in understand motivations and perspectives: 8

With some effort to communicate, I can usually understand how someone got where they are. It’s important to me to understand as many ways of being as possible. It’s my job to understand people, but the bigger motivation is that it bugs me if I don’t understand the root of a disagreement. Of course, this doesn’t mean I condone their perspective, believe it’s healthy/logical, or would recommend it wholesale to others.

1532·2 months ago

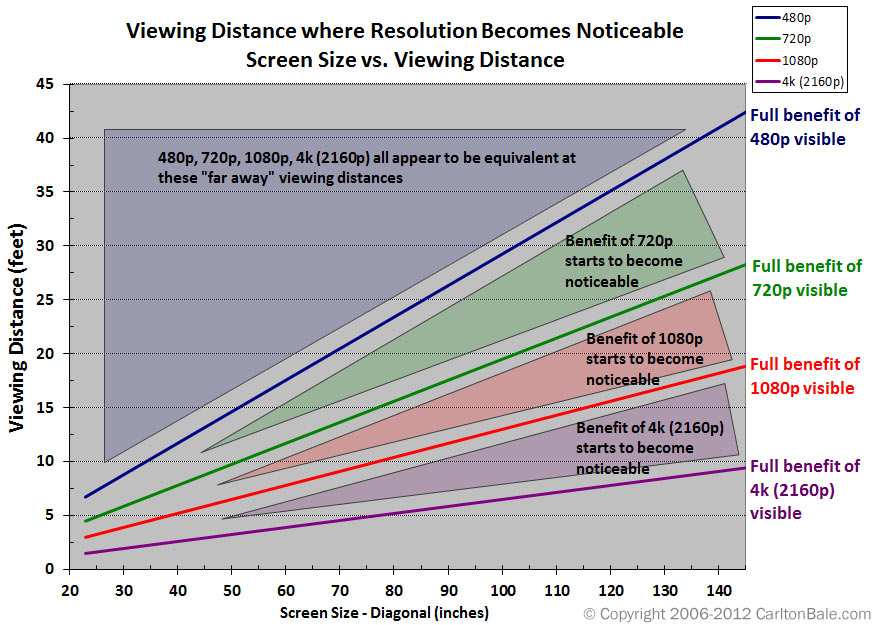

I can pretty confidently say that 4k is noticeable if you’re sitting close to a big TV. I don’t know that 8k would ever really be noticeable, unless the screen is strapped to your face, a la VR. For most cases, 1080p is fine, and there are other factors that start to matter way more than resolution after HD: bit-rate, compression type, dynamic range, etc.
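For anyone who wants to put rough numbers on that, here’s a back-of-the-envelope sketch. It assumes a flat 16:9 screen viewed head-on and uses the common rule of thumb that 20/20 vision resolves about 60 pixels per degree (1 arcminute per pixel). The function name, the 65-inch example, and the threshold are my own illustrative choices, not something taken from the chart above, and the result is an average across the screen width rather than an exact figure.

```python
import math

# Rule-of-thumb acuity limit for 20/20 vision (assumption): ~60 pixels per degree.
ACUITY_PPD = 60

def pixels_per_degree(horizontal_pixels, diagonal_in, distance_in, aspect=(16, 9)):
    """Average horizontal pixels per degree of visual angle for a flat screen.

    All lengths are in inches. Hypothetical helper for illustration only.
    """
    w, h = aspect
    # Physical screen width derived from the diagonal and the aspect ratio.
    width_in = diagonal_in * w / math.hypot(w, h)
    # Horizontal angle the screen subtends at the viewer's eye, in degrees.
    fov_deg = 2 * math.degrees(math.atan(width_in / (2 * distance_in)))
    return horizontal_pixels / fov_deg

if __name__ == "__main__":
    resolutions = {"1080p": 1920, "4k": 3840, "8k": 7680}
    for distance_ft in (4, 8):
        for name, px in resolutions.items():
            ppd = pixels_per_degree(px, diagonal_in=65, distance_in=distance_ft * 12)
            verdict = "at/above" if ppd >= ACUITY_PPD else "below"
            print(f'65" {name} at {distance_ft} ft: {ppd:.0f} ppd ({verdict} ~{ACUITY_PPD} ppd)')
```

Under those assumptions, 1080p on a 65-inch set only drops well below the threshold when you sit close, 4k lands right around it at that distance, and 8k is far past it, which lines up with the impression above. Bit-rate and compression don’t show up in this math at all.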

31·2 months ago

The fact that workers with expense accounts still feel they’re getting paid so little that they deserve to commit fraud says something about that stratum of employee.

Pretty much anyone who travels has to submit receipts. Most people who travel are not making bank. They’re the people who set up and stand at convention booths, sales staff support, assistants, videographers, etc. Also, most travel is a miserable ordeal. I’m not saying it’s okay to commit fraud, but let’s not equate the hourly employee “re-creating” his lost lunch receipt with a 6-figure income.

3·2 months ago

Hey! An excuse to quote my namesake.

Hackworth got all the news that was appropriate to his situation in life, plus a few optional services: the latest from his favorite cartoonists and columnists around the world; the clippings on various peculiar crackpot subjects forwarded to him by his father […] A gentleman of higher rank and more far-reaching responsibilities would probably get different information written in a different way, and the top stratum of New Chusan actually got the Times on paper, printed out by a big antique press […] Now nanotechnology had made nearly anything possible, and so the cultural role in deciding what should be done with it had become far more important than imagining what could be done with it. One of the insights of the Victorian Revival was that it was not necessarily a good thing for everyone to read a completely different newspaper in the morning; so the higher one rose in society, the more similar one’s Times became to one’s peers’. - The Diamond Age by Neal Stephenson (1995)

That is to say, I agree that everyone getting different answers is an issue, and it’s been a growing problem for decades. AI’s turbo-charged it, for sure. If I want, I can just have it yes-man me all day long.

32·2 months ago

Eh, people said the exact same thing about Wikipedia in the early 2000s. A group of randos on the internet is going to “crowd source” truth? Absurd! And the answer to that was always, “You can check the source to make sure it says what they say it says.” If you’re still checking Wikipedia sources, then you’re going to check the sources AI provides as well. All that changes about the process is how you get the list of primary sources. I don’t mind AI as a method of finding sources.

The greater issue is that people rarely check primary sources. And even when they do, the general level of education needed to read and understand those sources is a somewhat high bar. And the even greater issue is that AI-generated half-truths are currently mucking up primary sources. Add to that intentional falsehoods from governments and corporations, and it already seems significantly more difficult to get to the real data on anything post-2020.

<Three Spider-Men Point>