Aah, my bad… I was half asleep. What I meant was: use Round Sync / Syncthing to copy the files to the PC, and then use Kopia to back them up. Round Sync can do one-direction copying, so nothing is ever written back to the source files and they can't get corrupted.
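To make "one-direction copying" concrete, here is a minimal Python sketch of the idea. It is not how Round Sync or rclone work internally; `one_way_copy` is a hypothetical helper that pushes new or changed files from a source folder to a destination and never writes to the source. In practice you would let Round Sync (or `rclone copy`) do this part and then point Kopia at the destination folder.

```python
import os
import shutil
from pathlib import Path


def one_way_copy(source: Path, dest: Path) -> list[Path]:
    """Copy new or changed files from source to dest (hypothetical helper).

    Files only ever flow source -> dest: nothing under `source` is opened
    for writing, renamed or deleted, so a problem on the destination side
    cannot propagate back to the originals.
    """
    copied = []
    for root, _dirs, files in os.walk(source):
        rel = Path(root).relative_to(source)
        target_dir = dest / rel
        target_dir.mkdir(parents=True, exist_ok=True)
        for name in files:
            src_file = Path(root) / name
            dst_file = target_dir / name
            src_stat = src_file.stat()
            # Skip files whose size and modification time already match.
            if dst_file.exists():
                dst_stat = dst_file.stat()
                if (dst_stat.st_size == src_stat.st_size
                        and dst_stat.st_mtime_ns == src_stat.st_mtime_ns):
                    continue
            # copy2 also copies metadata such as the modification time.
            shutil.copy2(src_file, dst_file)
            copied.append(dst_file)
    return copied
```

If a file is changed on the PC side, the next run simply overwrites it from the source again; the phone's copy is never touched.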



First and foremost, Syncthing is not a ‘backup’ utility, and using it for backup is not recommended at all. This is especially true if you are dealing with Android or a Raspberry Pi, because the way clocks / time work on these systems is pretty weird and can create sync conflicts. So don’t.

Now to the solution. For backup, use a proper backup solution like Kopia. Modern backup tools let you browse the snapshots they create, and taking periodic snapshots gives you better redundancy and a better chance of disaster recovery.
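Kopia handles retention for you through its snapshot policies. The sketch below is only a hypothetical Python illustration of why periodic snapshots help: older points in time stay restorable even after a bad change has been synced everywhere. `snapshots_to_keep` and its parameters are made up for this example, loosely modeled on the "keep the latest N / keep one per day" style of retention settings that backup tools offer.

```python
from datetime import datetime


def snapshots_to_keep(times: list[datetime], keep_latest: int,
                      keep_daily: int) -> set[datetime]:
    """Pick which snapshot timestamps survive a simple retention policy.

    keep_latest: always keep this many of the most recent snapshots.
    keep_daily:  also keep the newest snapshot of each of the
                 `keep_daily` most recent calendar days that have one.
    """
    newest_first = sorted(times, reverse=True)
    keep = set(newest_first[:keep_latest])
    seen_days = []
    for t in newest_first:
        day = t.date()
        if day not in seen_days:
            if len(seen_days) == keep_daily:
                break  # already covered enough distinct days
            seen_days.append(day)
            keep.add(t)  # first hit for a day is that day's newest snapshot
    return keep
```

For example, with snapshots at 09:00 and 21:00 on three consecutive days, `keep_latest=1, keep_daily=2` keeps the 21:00 snapshots of the two most recent days.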

Now, if you won’t be using it for backup, take a look at ‘Round Sync’, available on F-Droid. It’s an app built around the exceptionally good tool ‘rclone’. It is somewhat similar to Syncthing but designed very differently, and it is more difficult to configure for copying files to a PC.

I also want to mention that I have used Syncthing for a lot of heavy lifting and never faced issues with it. It is a feature-complete app with the philosophy of doing one thing and doing it perfectly. So if you run into any issues, do reach out on the forums or to the devs. They will definitely help you.

14·2 months ago

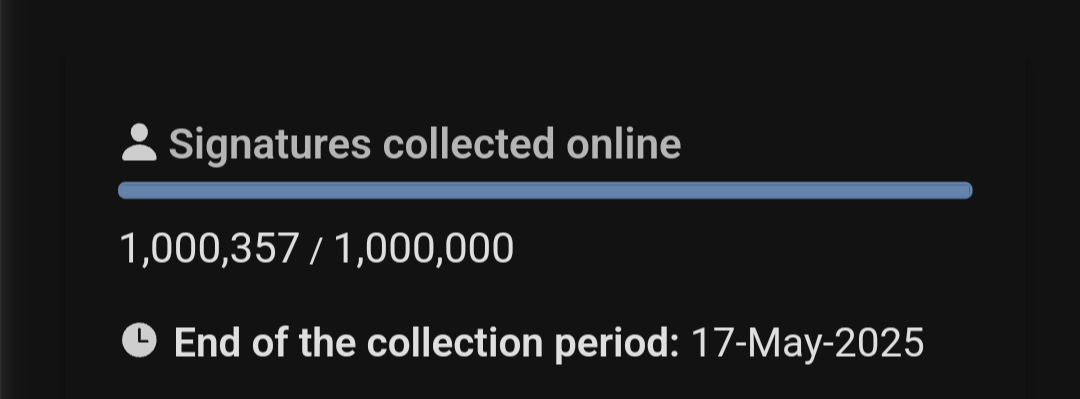

The organisation behind the initiative is from France.

Try `docker run liquidtrees:micro-algae`

Ah, understood. From that perspective, yes, it is not simple. I would also be happy if `pacman` had better support for the AUR. But I have a different perspective on this: I always look for the right or best tool available for a job, so I’m not that hesitant to use another tool for the AUR. I guess it’s personal preference after all.

Doesn’t `yay` simplify AUR installation? Things have been pretty easy for me since I started using `yay`.

5·5 months ago

IIRC it’s just a clone of Gitea, and the default interface is in Chinese. Why would a non-Chinese speaker go there when Codeberg and Forgejo are available in English?

8·5 months ago

I know the developer of this website. It’s a hard paywall. You won’t be able to bypass it by modifying page elements in any way.

As you mentioned in some other comments, only the currently archived ones will be available. If you are looking for just this specific article, let me know, I will archive and share the link here. If you are looking for a method to bypass it entirely, I don’t think it’s possible.

Also, I assume you are not in a position to afford the subscription right now. It’s reasonably priced considering the quality of the articles, so whenever you can afford it, subscribe and support them. Good long-form articles are nonexistent in Indian media nowadays.

1·5 months ago

Hey, thanks for the detailed explanations.

Regarding SSO, my concern is not the ChartDB team having my email (I was planning to give that anyway) but the SSO provider knowing I’m using this tool. It’s more personal paranoia than anything else. I know it’s much safer and easier to use third-party SSO than to manage your own authentication service. Done that, hated it. So I’m not really that annoyed about it.

The Mac-only part really annoyed me to the core. Then I received an email about Buckle, which amplified it. I think the problem is that you advertise it as ‘ChartDB 2.0’ and then suddenly it becomes ‘Buckle’. I can understand the thought process behind that kind of presentation, but I really don’t like it. TBH I would not be this mad if a large corporation used a similar tactic, but since this is an open source tool, I have some ethical issues with these practices.

Again, I know these are just inconveniences, not a scandal. So I’m not really complaining, just sharing how I felt. Apologies if that came across as rude.

21·5 months ago

I have some critical comments, not about the tool but about something related. Please look at the next section for questions and suggestions.

The tool needs to be clearer and less annoying about ChartDB v2.0.

This is presented as some big rework, so I signed up. Only then did it say that only macOS would be supported initially. This was a huge disappointment, and I felt kind of deceived into giving my email ID. I don’t remember seeing that it’s Mac-only at the beginning, on the sign-up page. Maybe I missed it.

Another thing: it looks like a different product altogether, with the name ‘Buckle’. Again, I didn’t see that in the sign-up section. Why a new product name? Are you switching to a freemium model?

Last but most importantly, why does it require signing up through a third party? If you just want to inform users when things are ready, just take the email and use it. There’s no need for access to my Google or GitHub account.

Apart from this terrible experience, I love the tool and how it visualises it.

I have a question. When I visualised my Postgres DB, it also showed my views, not just the tables. But I could not find an option to add a new view from the interface, which would let me use it as a more capable design tool. Is that feature in the pipeline?

Also, I could not find an option to set the length of a `varchar` type. I remember seeing this feature in other design tools.

3·7 months ago

Sorry for the delay, I got busy. I’m not entirely sure this is a dynamic registration issue; your screenshot points to something like a permission issue. This is a bit of a wild guess with very limited information, though.

Did you save any info from when you attempted to register the client manually and use the client ID and secret?

I will try to do some tests when I get to my setup. Do ping me if you have any updates.

2·7 months ago

Oh, so I guess no separate config file is used, only env variables. Is it possible for you to get the URL your app is requesting? If yes, please share a sample.

Also, double-check the realm name. I assume you created a new realm for your use and are not using master.

2·7 months ago

Hi, I have some experience with Keycloak. So I assume ~~you explicitly enabled~~ you are using OIDC dynamic registration. Can you share the config file after redacting sensitive contents?

1·8 months ago

Oh, I’d never heard of Amnezia; never needed it, actually. But it looks like a good improvement on WireGuard. I would need a separate setup to test it out, and currently I’m away from home with no idea when I will return. If I happen to find anything, I will definitely ping you.

On the HN page you linked, many people mentioned V2Ray. Have you tried that? How good is it?

7·8 months ago

Have you considered running Headscale on a cheap VPS? We are actually doing that and it is pretty capable. IIRC, you can configure it not to use the Tailscale servers at all and use your own public VPS for coordination. Bonus point: Tailscale hired the Headscale developer and maintainer, and they are allowed to work on Headscale while on Tailscale’s payroll. The team seems very committed to FOSS.

2·8 months ago

I would say that is exactly what you have to describe. As I said, certain things cannot be changed with therapy; it can only help you come to terms with them.

Regarding the last point you mentioned: you are not giving up on her. Exerting constant pressure can’t change certain realities. It is like thinking you can drain an ocean with a bucket if you just have enough time.

You have to accept that there is nothing ‘wrong’ with your partner. If she is asexual there is nothing to ‘cure’. You must build your life around this fact to be happy.

This does not mean that your needs should be discarded. In the same way that you accept and respect the fact that she is asexual, she also has to take a mature stand and work on finding common ground or compromises. That is how relationships work, isn’t it?

Start therapy. Remember that you will need to find a suitable therapist, so don’t hesitate to change therapists until you find one you are comfortable with. Maybe the therapist can help you figure out how to discuss this topic with your partner. That may slowly open up new ways to improve things.

3·8 months ago

No, no… Don’t blame yourself. You did nothing wrong here. Scientifically speaking, we still have no clear answer on how a person’s sexuality is determined, though so far there is a consensus that a biological factor is also in play.

It is not your failure as a partner. These things are beyond your control, and she can’t do much about it either. Therapy won’t change the underlying reality; it will just help you cope with the hard realities you are facing.

I highly recommend individual therapy if you haven’t tried it yet. You may have to untangle decades of experiences to come to terms with this. It’s never too late, and the right therapist will definitely improve how you handle this.

2·8 months ago

Oh, so sorry. I didn’t realise you were talking about your own situation. I thought the first comment was just a thought experiment; I didn’t pay enough attention. My bad.

In your case, I guess she could be on the asexual part of the spectrum. One of my friends is facing a similar situation: the partner has no sex drive at all, but is a great person in every other area. That relationship has lasted because my friend also has a low sex drive, though higher than the partner’s.

Since this has been going on for so long, I assume you have already tried couples therapy and individual therapy. If not, that is one thing you can try.

But keep in mind that if your partner really is asexual, there isn’t much you can do, and it’s not their fault in any way. So either you accept the situation and build a life around this fact, or you move on. Since you have been in the relationship for a long time, I guess everything else is going well, which means you have already chosen the first option.

It didn’t work like that for me. I must admit I didn’t dig deep to clearly see what the problem was. My setup had a Windows PC, a Raspberry Pi 5, and an Android phone, all sharing a folder of notes.

Whenever I saved changes on the Windows machine, the Android phone got updated without much issue, but the Raspberry Pi caused conflicts. When I looked at the timestamps they were different, and it looked to me like the Raspberry Pi 5’s Syncthing was sending the old file as the new one because of its save time.

I read somewhere that the issue is with how time is handled on the Raspberry Pi. So I disabled Syncthing on the Raspberry Pi and moved on, because it wasn’t really needed there.
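Roughly speaking, Syncthing’s documentation describes conflict handling along these lines: when the same file was changed on two devices, the version with the older modification time is the one set aside as a `.sync-conflict` copy. The hypothetical `resolve_conflict` below is a toy Python model of that kind of "newest modification time wins" rule, not Syncthing’s code. It shows how a clock that is off on one device (assumed here to be the Pi) lets an old file win over a genuinely newer edit.

```python
from dataclasses import dataclass


@dataclass
class FileVersion:
    device: str
    content: str
    mtime: float  # seconds since epoch, as reported by the device's own clock


def resolve_conflict(a: FileVersion, b: FileVersion) -> tuple[FileVersion, FileVersion]:
    """Return (winner, loser) under a 'newest modification time wins' rule.

    The loser would be kept aside as a conflict copy. The rule trusts each
    device's clock, so a device whose clock runs ahead can make an old edit
    look newer than a real one. Ties go to `a` (an arbitrary choice here).
    """
    if a.mtime >= b.mtime:
        return a, b
    return b, a
```

For example, if the PC saves new notes at its correct time while the Pi re-saves its stale copy with a clock running ten minutes fast, the stale copy wins and the real edit ends up as the conflict file.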